YOLO algorithm


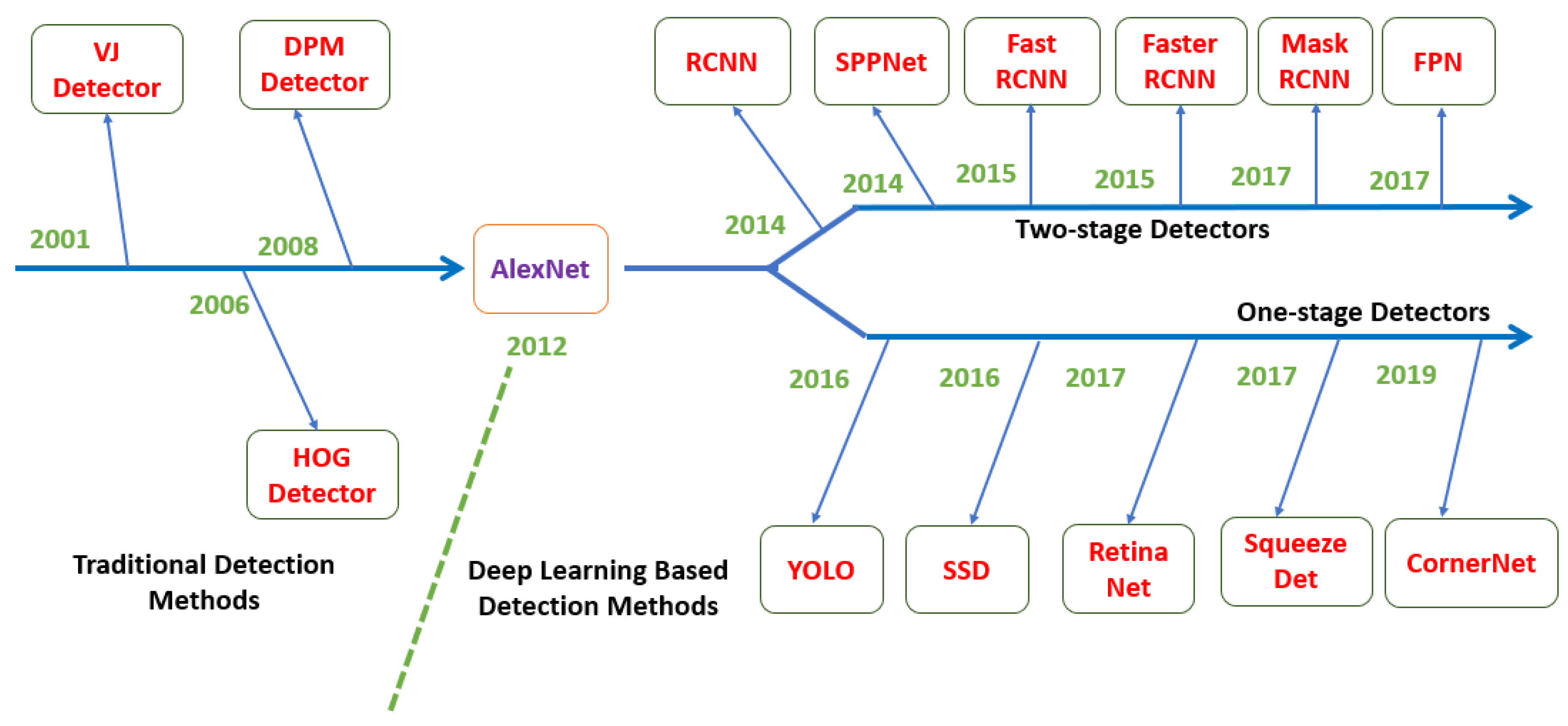

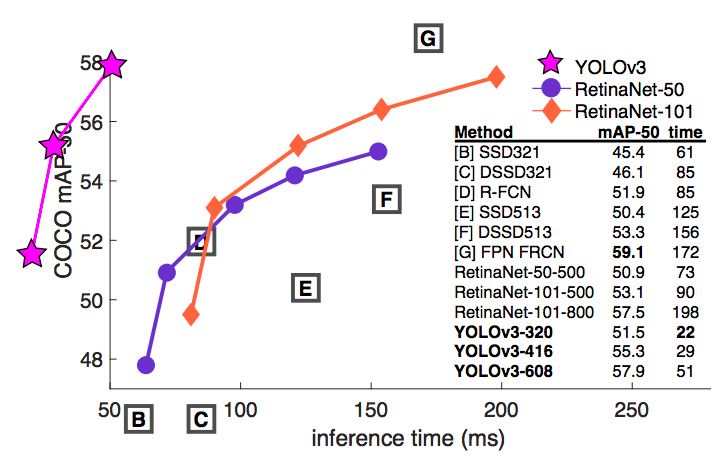


Timeline (left): YOLO was the first one-look object detection algorithm and has given rise to a whole class of modern detection algorithms.

Performance (right): YOLO's performance compared with the state of the art at the time of publication.


Revision as of 19:28, 22 December 2020

What is YOLO and why is it so revolutionary?

YOLO (you only look once) is a real-time object detection algorithm originally proposed in Redmon et al. 2015 (https://arxiv.org/pdf/1506.02640.pdf). One of the ways YOLO revolutionized the field of object detection is that it was the first algorithm to implement a one-look approach. For multiclass object detection, instead of running a detector many times over an image (for example, once per class or once per candidate region), YOLO performs a single pass over the whole image and generates the bounding boxes and class predictions for all detected objects at once. Producing predictions in a single pass made YOLO much faster than other state-of-the-art detectors of 2015; the paper reports 45 frames per second for its base model. Below are a timeline showing how YOLO gave rise to the class of one-look algorithms (left) and a plot comparing YOLO's performance and inference time with other state-of-the-art algorithms of the time (right).
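To make "a single pass generates all of the bounding boxes" concrete: in the YOLO v1 paper the image is divided into an S × S grid, and each grid cell predicts B boxes (x, y, w, h and a confidence score) plus C conditional class probabilities, so one forward pass yields a single S × S × (B·5 + C) tensor (7 × 7 × 30 for PASCAL VOC, with S = 7, B = 2, C = 20). The minimal NumPy sketch below decodes such a tensor into detections. The per-cell ordering of the values, the function name `decode_yolo_v1` and the `score_threshold` default are assumptions made for illustration, not the reference implementation, and the non-maximum suppression step the paper applies afterwards is omitted.

```python
import numpy as np

def decode_yolo_v1(output, S=7, B=2, C=20, score_threshold=0.2):
    """Turn one S x S x (B*5 + C) prediction tensor into a list of detections.

    Assumed (hypothetical) layout per cell: B boxes of (x, y, w, h, confidence),
    followed by C conditional class probabilities Pr(class | object).
    x, y are offsets inside the cell; w, h are fractions of the image size.
    """
    assert output.shape == (S, S, B * 5 + C)
    detections = []
    for row in range(S):
        for col in range(S):
            cell = output[row, col]
            class_probs = cell[B * 5:]  # shared by all B boxes of this cell
            for b in range(B):
                x, y, w, h, conf = cell[b * 5:(b + 1) * 5]
                # Class-specific score = Pr(class | object) * box confidence.
                scores = class_probs * conf
                cls = int(np.argmax(scores))
                if scores[cls] < score_threshold:
                    continue
                # Convert the cell-relative centre to image-relative coordinates in [0, 1].
                cx = (col + x) / S
                cy = (row + y) / S
                detections.append((cls, float(scores[cls]), float(cx), float(cy), float(w), float(h)))
    return detections

# Example: one confident box in grid cell (row 3, col 4) predicting class 11.
pred = np.zeros((7, 7, 30))
pred[3, 4, 0:5] = [0.5, 0.5, 0.2, 0.3, 0.9]
pred[3, 4, 10 + 11] = 0.8
print(decode_yolo_v1(pred))  # one detection: class 11, score about 0.72, centre (4.5/7, 0.5)
```

Every box in the image comes out of the same tensor, which is why no per-region or per-class re-evaluation of the network is needed.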

YOLO V1

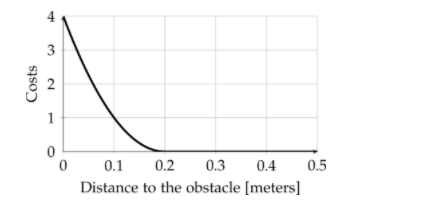

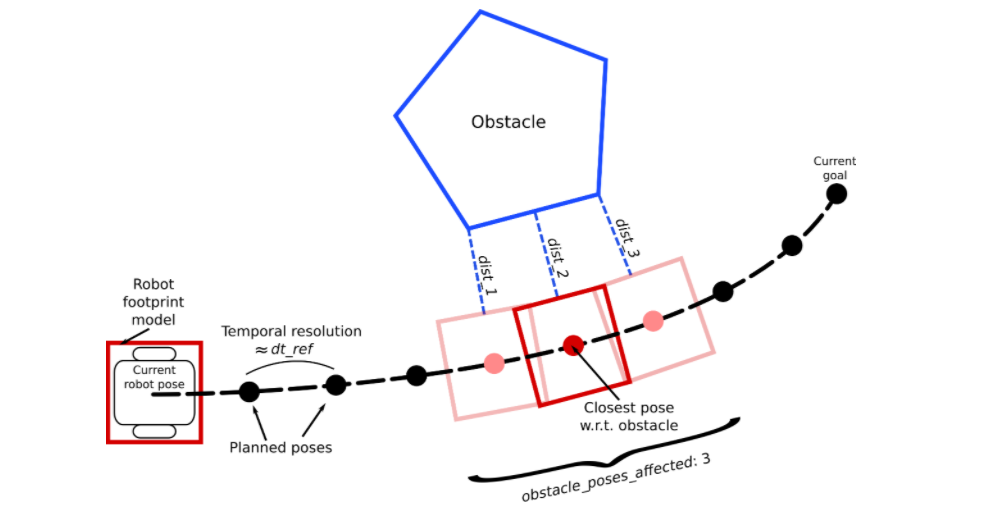

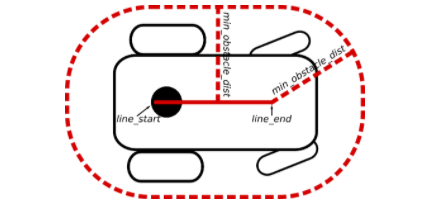

ROS also provides a package that handles obstacle avoidance, teb_local_planner (http://wiki.ros.org/teb_local_planner/Tutorials/Obstacle%20Avoidance%20and%20Robot%20Footprint%20Model). Its optimizer uses cost terms and optimization weights based on the distance from the robot to the closest point on each obstacle. The package's Robot Footprint Model approximates the size of the robot (here, the bike) with one of several shapes: a point, a circle, a line, two circles, or a polygon. The polygon approximation requires extra computation time, which would slow the bike's response to obstacles and drain its battery.
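The idea of penalizing the distance to the closest obstacle point, and why the footprint shape affects computation time, can be illustrated with a minimal sketch. This is not teb_local_planner's code: the function names, the quadratic penalty, and the parameters `min_obstacle_dist` and `weight` are hypothetical choices for illustration. The polygon case needs one point-to-segment test per edge, so its cost grows with the number of vertices, while the point and line models need a single test.

```python
import math

def point_segment_distance(p, a, b):
    """Euclidean distance from point p to segment a-b (all (x, y) tuples)."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    length_sq = dx * dx + dy * dy
    if length_sq == 0.0:
        return math.hypot(px - ax, py - ay)  # degenerate segment behaves like a point
    # Project p onto the segment and clamp the parameter to [0, 1].
    t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / length_sq))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))

def footprint_distance(vertices, obstacle):
    """Distance from an obstacle point to a footprint given as a vertex list.

    1 vertex = point model, 2 vertices = line model, >= 3 = closed polygon.
    Assumption of this sketch: the obstacle lies outside the polygon,
    so only the distance to its edges is computed.
    """
    if len(vertices) == 1:
        return math.hypot(obstacle[0] - vertices[0][0], obstacle[1] - vertices[0][1])
    if len(vertices) == 2:
        return point_segment_distance(obstacle, vertices[0], vertices[1])
    n = len(vertices)
    # One segment test per polygon edge: more vertices means more work.
    return min(point_segment_distance(obstacle, vertices[i], vertices[(i + 1) % n])
               for i in range(n))

def obstacle_cost(vertices, obstacles, min_obstacle_dist=0.5, weight=10.0):
    """Hypothetical penalty: zero while the closest obstacle is at least
    min_obstacle_dist away, growing quadratically as it gets closer."""
    closest = min(footprint_distance(vertices, o) for o in obstacles)
    violation = max(0.0, min_obstacle_dist - closest)
    return weight * violation ** 2

# Example: a bike approximated as a 1 m line versus a 1 m x 0.4 m polygon.
bike_line = [(-0.5, 0.0), (0.5, 0.0)]
bike_box = [(-0.5, -0.2), (0.5, -0.2), (0.5, 0.2), (-0.5, 0.2)]
print(obstacle_cost(bike_line, [(0.0, 0.3)]))  # obstacle 0.3 m from the line
print(obstacle_cost(bike_box, [(0.0, 0.3)]))   # obstacle 0.1 m from the polygon edge
```

In a real planner this cost would be evaluated for many poses along a candidate trajectory at every optimization step, which is where the per-edge work of the polygon model adds up.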